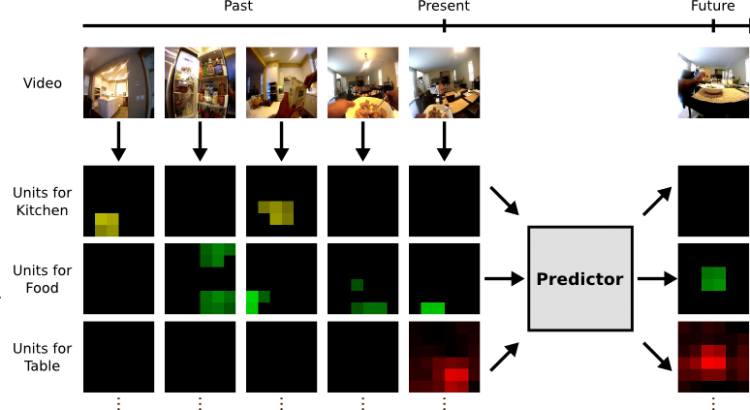

In many computer vision applications, machines will need to reason beyond the present and predict the future. This task is challenging because it requires leveraging extensive commonsense knowledge of the world that is difficult to write down. We believe that a promising resource for efficiently obtaining this knowledge is the massive amount of readily available unlabeled video. In this paper, we present a large-scale framework that capitalizes on temporal structure in unlabeled video to learn to anticipate both actions and objects in the future. The key idea behind our approach is that we can train deep networks to predict the visual representation of images in the future. We experimentally validate this idea on two challenging “in the wild” video datasets, and our results suggest that learning with unlabeled videos significantly helps forecast actions and anticipate objects.
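As a rough illustration of the key idea, and not the authors' implementation, the sketch below learns to regress the representation of a future frame from the representation of the present frame. It makes several loud assumptions: a linear map trained with plain stochastic gradient descent stands in for a deep network, synthetic 3-dimensional vectors stand in for real frame features, and the names `predict` and `train_future_predictor` are hypothetical. No labels are used; the only supervision is the (present, future) pairing that temporal order provides for free.

```python
import random

def predict(weights, present):
    """Map a present-frame feature vector to a predicted future-frame vector."""
    return [sum(w * x for w, x in zip(row, present)) for row in weights]

def train_future_predictor(pairs, dim, lr=0.05, epochs=50):
    """Fit a linear map by SGD on squared error between predicted and actual future features."""
    weights = [[0.0] * dim for _ in range(dim)]
    for _ in range(epochs):
        for present, future in pairs:
            predicted = predict(weights, present)
            for i in range(dim):
                err = predicted[i] - future[i]
                for j in range(dim):
                    weights[i][j] -= lr * err * present[j]
    return weights

def mean_squared_error(predictions, targets):
    total = sum((p - t) ** 2 for pv, tv in zip(predictions, targets) for p, t in zip(pv, tv))
    return total / (len(targets) * len(targets[0]))

# Synthetic stand-in for video: the "future" feature is a cyclic shift of the
# "present" feature. This toy dynamic is an assumption made for illustration.
rng = random.Random(0)
DIM = 3
present_feats = [[rng.gauss(0.0, 1.0) for _ in range(DIM)] for _ in range(250)]
future_feats = [[x[1], x[2], x[0]] for x in present_feats]
pairs = list(zip(present_feats, future_feats))

train_pairs, test_pairs = pairs[:200], pairs[200:]
weights = train_future_predictor(train_pairs, DIM)

test_targets = [future for _, future in test_pairs]
learned_mse = mean_squared_error([predict(weights, p) for p, _ in test_pairs], test_targets)
# Baseline: assume the future representation equals the present one.
copy_mse = mean_squared_error([p for p, _ in test_pairs], test_targets)
```

On this toy data the learned predictor should beat the copy-the-present baseline by a wide margin. In a real system, a recognition model applied to the predicted representation would then produce the anticipated action or object, a step this sketch leaves out.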

Tag: Carl Vondrick

Paper: Visualizing Object Detection Features

We introduce algorithms to visualize feature spaces used by object detectors. The tools in this paper allow a human to put on “HOG goggles” and perceive the visual world as a HOG-based object detector sees it. We found that these visualizations allow us to analyze object detection systems in new ways and gain new insight into the detector’s failures. For example, when we visualized the features for high-scoring false alarms, we discovered that, although they are clearly wrong in image space, they look deceptively similar to true positives in feature space. This result suggests that many of these false alarms are caused by our choice of feature space, and indicates that creating a better learning algorithm or building bigger datasets is unlikely to correct these errors. By visualizing feature spaces, we can gain a more intuitive understanding of our detection systems.