This paper proposes Fast R-CNN, a clean and fast framework for object detection. Compared to traditional R-CNN, and its accelerated version SPPnet, Fast R-CNN trains networks using a multi-task loss in a single training stage. The multi-task loss simplifies learning and improves detection accuracy. Unlike SPPnet, all network layers can be updated during fine-tuning. We show that this difference has practical ramifications for very deep networks, such as VGG16, where mAP suffers when only the fully-connected layers are updated. Compared to “slow” R-CNN, Fast R-CNN is 9x faster at training VGG16 for detection, 213x faster at test-time, and achieves a significantly higher mAP on PASCAL VOC 2012. Compared to SPPnet, Fast R-CNN trains VGG16 3x faster, tests 10x faster, and is more accurate. Fast R-CNN is implemented in Python and C++ and is available under the open-source MIT License at this https URL

Kategori: Makaleler

Makale: Real-Time Pedestrian Detection With Deep Networks Cascades

We present a new real-time approach to object detection that exploits the efficiency of cascade classifiers with the accuracy of deep neural networks. Deep networks have been shown to excel at classification tasks, and their ability to operate on raw pixel input without the need to design special features is very appealing. However, deep nets are notoriously slow at inference time. In this paper, we propose an approach that cascades deep nets and fast features, that is both extremely fast and extremely accurate. We apply it to the challenging task of pedestrian detection. Our algorithm runs in real-time at 15 frames per second. The resulting approach achieves a 26.2% average miss rate on the Caltech Pedestrian detection benchmark, which is competitive with the very best reported results. It is the first work we are aware of that achieves extremely high accuracy while running in real-time.

Makale: Dropout: A Simple Way to Prevent Neural Networks from Overfitting

Deep neural nets with a large number of parameters are very powerful machine learning systems. However, overfitting is a serious problem in such networks. Large networks are also slow to use, making it difficult to deal with overfitting by combining the predictions of many different large neural nets at test time. Dropout is a technique for addressing this problem. The key idea is to randomly drop units (along with their connections) from the neural network during training. This prevents units from co-adapting too much. During training, dropout samples from an exponential number of different “thinned” networks. At test time, it is easy to approximate the effect of averaging the predictions of all these thinned networks by simply using a single unthinned network that has smaller weights. This significantly reduces overfitting and gives major improvements over other regularization methods. We show that dropout improves the performance of neural networks on supervised learning tasks in vision, speech recognition, document classification and computational biology, obtaining state-of-the-art results on many benchmark data sets.

Makale: Distilling the Knowledge in a Neural Network

A very simple way to improve the performance of almost any machine learning algorithm is to train many different models on the same data and then to average their predictions. Unfortunately, making predictions using a whole ensemble of models is cumbersome and may be too computationally expensive to allow deployment to a large number of users, especially if the individual models are large neural nets. Caruana and his collaborators have shown that it is possible to compress the knowledge in an ensemble into a single model which is much easier to deploy and we develop this approach further using a different compression technique. We achieve some surprising results on MNIST and we show that we can significantly improve the acoustic model of a heavily used commercial system by distilling the knowledge in an ensemble of models into a single model. We also introduce a new type of ensemble composed of one or more full models and many specialist models which learn to distinguish fine-grained classes that the full models confuse. Unlike a mixture of experts, these specialist models can be trained rapidly and in parallel.

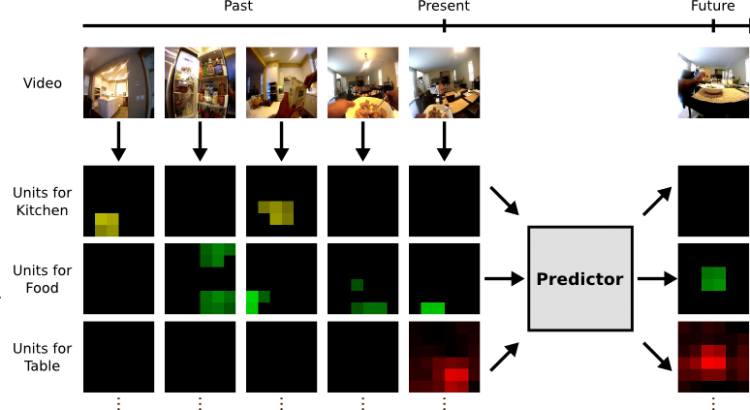

Makale: Anticipating the Future by Watching Unlabeled Video

In many computer vision applications, machines will need to reason beyond the present, and predict the future. This task is challenging because it requires leveraging extensive commonsense knowledge of the world that is difficult to write down. We believe that a promising resource for efficiently obtaining this knowledge is through the massive amounts of readily available unlabeled video. In this paper, we present a large scale framework that capitalizes on temporal structure in unlabeled video to learn to anticipate both actions and objects in the future. The key idea behind our approach is that we can train deep networks to predict the visual representation of images in the future. We experimentally validate this idea on two challenging “in the wild” video datasets, and our results suggest that learning with unlabeled videos significantly helps forecast actions and anticipate objects.