Related paper: Deep Visual-Semantic Alignments for Generating Image Descriptions

Everything you wanted to know about ILSVRC: data collection, results, trends over the years, current computer vision accuracy, even a stab at computer vision vs. human vision accuracy — all here!

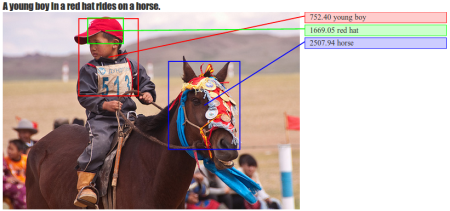

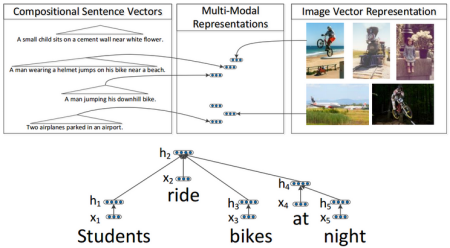

We train a multi-modal embedding to associate fragments of images (objects) and sentences (noun and verb phrases) with a structured, max-margin objective. Our model enables efficient and interpretable retrieval of images from sentence descriptions (and vice versa).

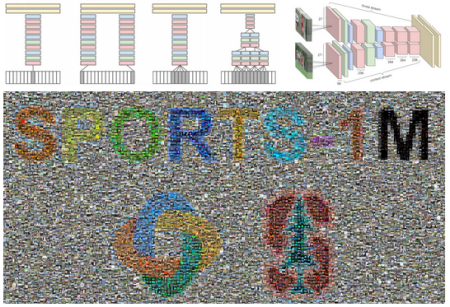

We introduce Sports-1M: a dataset of 1.1 million YouTube videos annotated with 487 sports classes. This dataset allowed us to train large Convolutional Neural Networks that learn spatio-temporal features from video rather than from single, static images.

Our model learns to associate images and sentences in a common embedding space. We use a Recursive Neural Network to compute representations for sentences and a Convolutional Neural Network for images. We then learn a model that associates images and sentences through a structured, max-margin objective.
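To make the max-margin idea concrete, here is a minimal sketch of a bidirectional ranking loss over image and sentence vectors. It is a simplified illustration, not the objective from the paper: it assumes the two networks have already produced fixed-length vectors, that the vectors at the same index form a matching pair, and that similarity is a plain dot product. The function names `dot` and `ranking_loss` and the `margin` parameter are hypothetical.

```python
def dot(u, v):
    """Dot product used here as the (assumed) image-sentence similarity score."""
    return sum(a * b for a, b in zip(u, v))


def ranking_loss(image_vecs, sentence_vecs, margin=1.0):
    """Bidirectional hinge ranking loss (illustrative, not the paper's exact form).

    image_vecs[k] and sentence_vecs[k] are assumed to be a matching pair.
    Every mismatched pair is penalized unless the matching pair outscores
    it by at least `margin`.
    """
    n = len(image_vecs)
    scores = [[dot(img, sen) for sen in sentence_vecs] for img in image_vecs]
    loss = 0.0
    for k in range(n):
        correct = scores[k][k]
        for l in range(n):
            if l == k:
                continue
            # Image k should rank its own sentence above sentence l.
            loss += max(0.0, margin + scores[k][l] - correct)
            # Sentence k should rank its own image above image l.
            loss += max(0.0, margin + scores[l][k] - correct)
    return loss


# Toy vectors: matching pairs point in the same direction.
images = [[2.0, 0.0], [0.0, 2.0]]
sentences = [[2.0, 0.0], [0.0, 2.0]]
aligned_loss = ranking_loss(images, sentences)           # 0.0
swapped_loss = ranking_loss(images, sentences[::-1])     # 20.0
```

With well-aligned embeddings every margin constraint is satisfied and the loss is zero. Swapping the sentences violates all four constraints, so gradient descent on this quantity would push matching pairs together and mismatched pairs apart.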